What is Context Engineering and why does it matter?

The newest concept in AI that you and your team need to understand


Table of contents

• What is Context Engineering?

• Examples of “Context”

• How did we get here?

• The Context is the Product

• Use Cases and Ideas

What is Context Engineering?

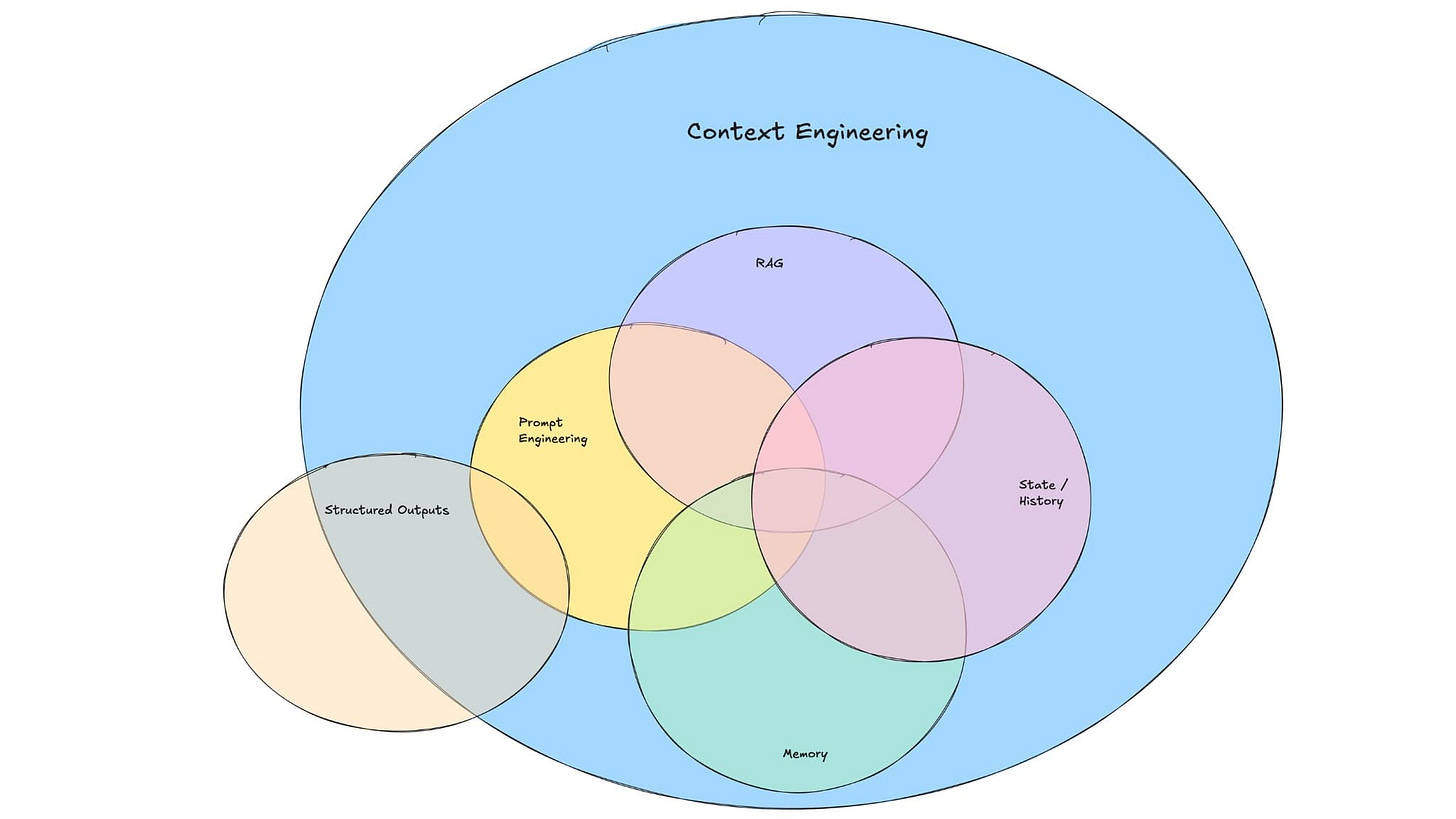

Context Engineering has recently emerged as a concept related to Prompt Engineering, but much broader in scope. While Prompt Engineering refers to the practice of refining the specific prompt the user provides to an LLM, Context Engineering refers to all of the ways context can be provided to an LLM.

It’s a useful distinction because there are many ways to provide context to the model. Some context is supplied manually, while some can be automated. If you’ve read my articles, you know that I firmly believe the quality of the context provided to the model is the best predictor of the quality of the output you’ll receive, and is therefore incredibly important to get right.

Examples of “Context”

Context, in short, is the information the model uses to understand a request and generate output. This is typically natural language, but it doesn’t have to be. Here are a few examples of context that a model might consume:

• System instructions (the role or high-level directives given to the model)

• User prompt or query (the immediate question/task)

• Short-term memory / conversation history (prior interactions in a chat)

• Long-term memory (stored knowledge or facts preserved across sessions)

• Retrieved external information (documents, database records, or web results fetched via Retrieval-Augmented Generation)

• Available tools and their outputs (functions the model can call, and the results returned)

• Structured output format requirements (schemas or examples that guide the model’s answer structure)
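To make the list concrete, here is a minimal Python sketch of how these pieces might be combined into a single request for a chat-style model. The `ContextBundle` class, its field names, and the role/content message shape are illustrative assumptions (the shape resembles common chat APIs, but no specific provider is called). Retrieval and tool execution are stubbed out as plain strings, and tool definitions are left out on the assumption that they are passed to the model separately.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Hypothetical container for the kinds of context listed above."""
    system_instructions: str
    user_prompt: str
    history: list[dict] = field(default_factory=list)          # short-term memory: prior turns
    long_term_memory: list[str] = field(default_factory=list)  # facts kept across sessions
    retrieved_docs: list[str] = field(default_factory=list)    # e.g. RAG results
    tool_results: list[str] = field(default_factory=list)      # outputs returned by tool calls
    output_format: str = ""                                    # schema or example answer


def build_messages(ctx: ContextBundle) -> list[dict]:
    """Flatten a ContextBundle into role/content chat messages (assumed format)."""
    system_parts = [ctx.system_instructions]
    if ctx.long_term_memory:
        facts = "\n".join(f"- {fact}" for fact in ctx.long_term_memory)
        system_parts.append("Known facts about the user:\n" + facts)
    if ctx.retrieved_docs:
        system_parts.append("Relevant documents:\n" + "\n\n".join(ctx.retrieved_docs))
    if ctx.tool_results:
        system_parts.append("Tool results:\n" + "\n".join(ctx.tool_results))
    if ctx.output_format:
        system_parts.append("Answer using this format:\n" + ctx.output_format)

    messages = [{"role": "system", "content": "\n\n".join(system_parts)}]
    messages.extend(ctx.history)  # conversation history, oldest turn first
    messages.append({"role": "user", "content": ctx.user_prompt})
    return messages
```

Only the user prompt changes from turn to turn; everything else in the bundle is context that a product can gather and maintain on the user’s behalf.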

Reading through this list, you might be thinking to yourself, “…aha, that’s why they call it context engineering.” There are enough types of context that fairly complex systems can be designed and engineered specifically to supply the model with the highest-quality context automatically.
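One way such a system might decide what goes into the model’s limited context window, sketched under simple assumptions: each candidate piece of context gets a priority, and pieces are packed into a fixed budget from most to least important. The `ContextItem` type and the priority scale are hypothetical, and token counts are approximated by whitespace-separated word counts, which is only a rough stand-in for a real tokenizer.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    name: str      # label such as "system", "memory", or "doc"
    text: str
    priority: int  # higher means more important to keep (hypothetical scale)


def approx_tokens(text: str) -> int:
    # Rough proxy: whitespace-separated words. A real system would use the model's tokenizer.
    return len(text.split())


def pack_context(items: list[ContextItem], budget: int) -> list[ContextItem]:
    """Walk items from highest to lowest priority, keeping each one that still fits."""
    # Rank indices by priority; sorted() is stable, so ties keep their original order.
    ranked = sorted(range(len(items)), key=lambda i: items[i].priority, reverse=True)
    kept = set()
    used = 0
    for i in ranked:
        cost = approx_tokens(items[i].text)
        if used + cost <= budget:
            kept.add(i)
            used += cost
    # Return the kept items in their original order so the prompt reads sensibly.
    return [items[i] for i in sorted(kept)]
```

A greedy pass like this is easy to reason about but not optimal; production systems might instead summarize, re-rank by relevance to the current query, or drop the oldest conversation turns first.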

How did we get here?

Here’s a rough, low-precision timeline of how we arrived at Context Engineering:

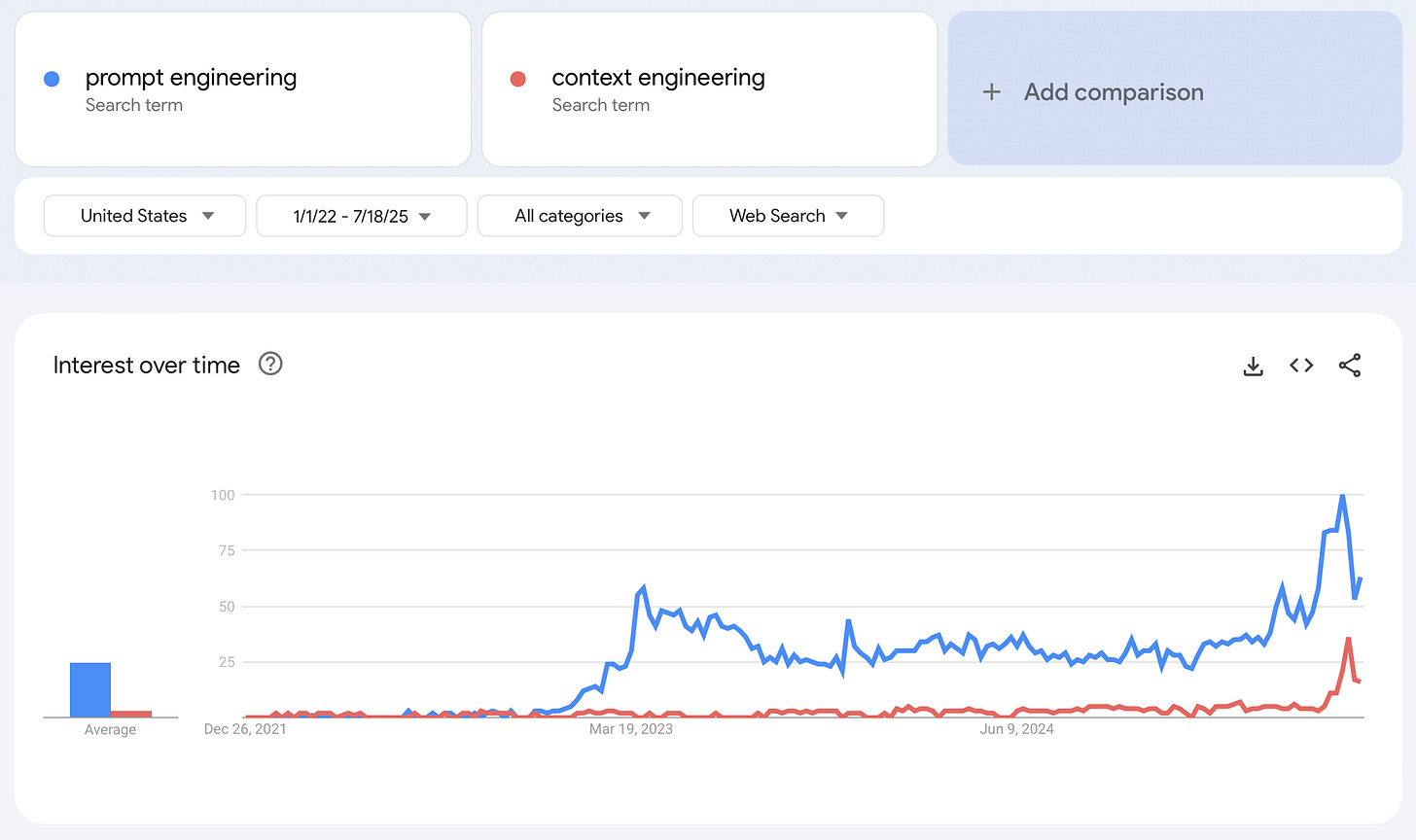

2022 → Prompt Engineering emerges as a critical technique for coaxing desired output from models.

2023 → The community begins to acknowledge limitations of prompt engineering and explore more sophisticated techniques.

2024 → Retrieval-augmented generation (RAG) becomes popular, model memory becomes table stakes for foundation model products, and tool use begins to emerge.

2025 → All the aforementioned techniques get bundled into a single concept called Context Engineering.

The Context is the Product

It’s hard to overstate just how important context is in an LLM-driven application. When I’m using Cursor, for example, the file context I provide to the system has a direct impact on the quality of the generated code. In ChatGPT, the memory it has of my past conversations directly affects the model’s ability to connect my question to other ideas we have explored together and to help me connect the dots between concepts.

One way to illustrate this is with a simple thought experiment (illustrated above). Imagine two products that are identical from a UI/UX perspective. Both are LLM-driven, chat-based products that look basically like ChatGPT, but they serve two very different use cases:

Tutoring → help me learn and retain academic material

Therapy → help me understand myself, others, and grow as an individual

The two products are identical in every way except one: they create and maintain very different contexts in the background while I interact with them. The Tutoring app stores things like:

• Past lessons

• My interests

• Areas and topics I can improve in

• My learning style

• Projects I have worked on

• Evaluations of my performance and my grades

While the Therapy app stores things like:

• A list of my relationships

• My personal wins and challenges

• Goals I am working towards

• Past traumas and triggers

• My communication style

In this thought experiment, the context is the only difference between the products. It is the context that enriches everything I say or type into the product and allows the product to respond in a way that is relevant to its use case.
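The thought experiment can be sketched in a few lines of Python: the function that wraps the user’s message is shared, and only the stored context differs. The `PROFILES` dictionary, the `enrich` function, and every stored fact below are hypothetical placeholders loosely echoing the lists above; a real product would build and update this context over time rather than hard-code it.

```python
# Hypothetical stored context for two otherwise-identical chat products.
PROFILES = {
    "tutor": {
        "role": "You are a patient tutor who helps the user learn and retain academic material.",
        "memory": {
            "past lessons": ["Intro to derivatives"],
            "learning style": ["Prefers worked examples"],
            "areas to improve": ["Chain rule"],
        },
    },
    "therapy": {
        "role": "You are a supportive guide who helps the user understand themselves and others.",
        "memory": {
            "relationships": ["Close with sister"],
            "goals": ["Set clearer boundaries at work"],
            "communication style": ["Prefers direct feedback"],
        },
    },
}


def enrich(product: str, user_message: str) -> str:
    """Wrap the same user message with product-specific context."""
    profile = PROFILES[product]
    lines = [profile["role"], "", "What you know about the user:"]
    for category, facts in profile["memory"].items():
        lines.append(f"- {category}: {'; '.join(facts)}")
    lines += ["", f"User: {user_message}"]
    return "\n".join(lines)
```

Calling `enrich("tutor", "I'm stuck")` and `enrich("therapy", "I'm stuck")` sends the same words to the model wrapped in entirely different context, which is the whole difference between the two products.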

On some level, this isn’t really a thought experiment; it’s how many products, and many agents, are going to be designed in the near future. Context Engineering is a competency that will allow builders to design and engineer high-quality agents.

Use Cases and Ideas

I’ll leave you with three ideas for how you might apply Context Engineering to new products. I hope these stimulate some interesting conversations for you and your team. Let me know what you build!

App Idea → Context it needs and how it might use it

Email & Calendar Agent → The agent could pull your emails, your relationships with contacts, and your upcoming schedule into context. Using this context, it could draft emails in a relationship-appropriate tone and schedule meetings at times when you are available.

Creative Writing Assistant → The product might maintain a “world state” for all the characters, backstories, and narrative arcs explored in the writing. It could use this context to point out plot holes or suggest storylines the writer might want to explore.

Project Management Assistant → The context might include data on projects from tools like Trello, Notion, or Jira. It could also include project-relevant chat context from Slack, Teams, or email. Combining this information, the assistant could generate and broadcast accurate status updates across a portfolio of projects.
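As one small piece of the Email & Calendar Agent idea, here is a Python sketch of how raw calendar context might be turned into available meeting times before anything is handed to the model. It assumes events have already been fetched as `(start, end)` `datetime` pairs for a single day and that a working window (for example 9:00 to 17:00) is known; the `free_slots` helper is hypothetical, and fetching from a real calendar API, time zones, and the email-drafting step are out of scope.

```python
from datetime import datetime, timedelta


def free_slots(
    events: list[tuple[datetime, datetime]],
    day_start: datetime,
    day_end: datetime,
    min_length: timedelta = timedelta(minutes=30),
) -> list[tuple[datetime, datetime]]:
    """Return gaps of at least min_length between busy events within the working window.

    Events may be unsorted or overlapping.
    """
    slots = []
    cursor = day_start  # earliest moment not yet known to be busy
    for start, end in sorted(events):
        # Clip each event to the working window.
        start, end = max(start, day_start), min(end, day_end)
        if start >= end:
            continue  # event lies entirely outside the window
        if start - cursor >= min_length:
            slots.append((cursor, start))
        cursor = max(cursor, end)  # handles overlapping events
    if day_end - cursor >= min_length:
        slots.append((cursor, day_end))
    return slots
```

The resulting gaps could then be written into the agent’s context as short plain-text lines (for example, “Free: 9:00 to 10:00”), so the model proposes only times that actually work instead of guessing.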

You might be interested

I hope this helps! Definitely let me know if you have any questions or comments. If you’ve made it this far, here are a few things you might be interested in:

I periodically hold workshops focused on building with AI. Get notified when I have the next workshop.

I offer 1:1 paid coaching and consultations. Email me directly to inquire at andrew@distll.ai
