Prompt Driven Development

The shift from syntax fluency to architectural understanding

I was invited by No-Code Exits to turn this article into a 30-minute lightning talk!

I built an app over Thanksgiving, quickly gained 1,000 users, and monetized before Christmas.

The really wild part is... I did not personally write a single line of code. Instead, I instructed LLMs at every step along the way, and together we built a full-stack Node.js / React app with a PostgreSQL database, integrated with Stripe, hosted on Heroku, using SendGrid for email and Cloudflare for DNS.

I have since been thinking very deeply about Prompt Driven Development: how to do it well, and what I think it means for the industry moving forward. Below are my honest, incomplete, and debatable thoughts. Enjoy, and feedback is welcome.

Outline

What is Prompt Driven Development (PDD)?

Tools and Workflows

Mental Models for Success

Industry Implications

What is Prompt Driven Development (PDD)?

At its core, PDD is a development workflow in which the developer is primarily prompting an LLM to generate all the necessary code. Let’s contrast this with traditional development.

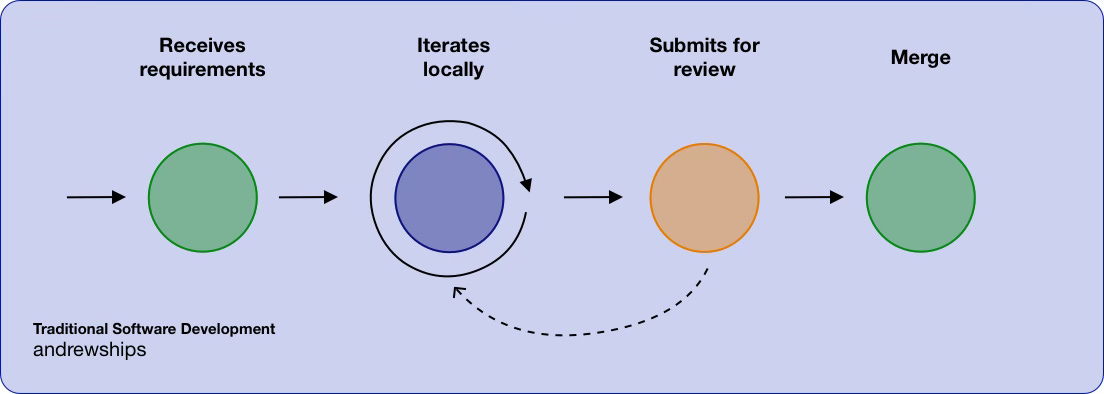

At a very high level, traditional software development typically looks something like this:

Developer receives requirements

Developer iterates on code changes locally in their IDE

Developer submits code changes for review

Another developer reviews and merges the changes

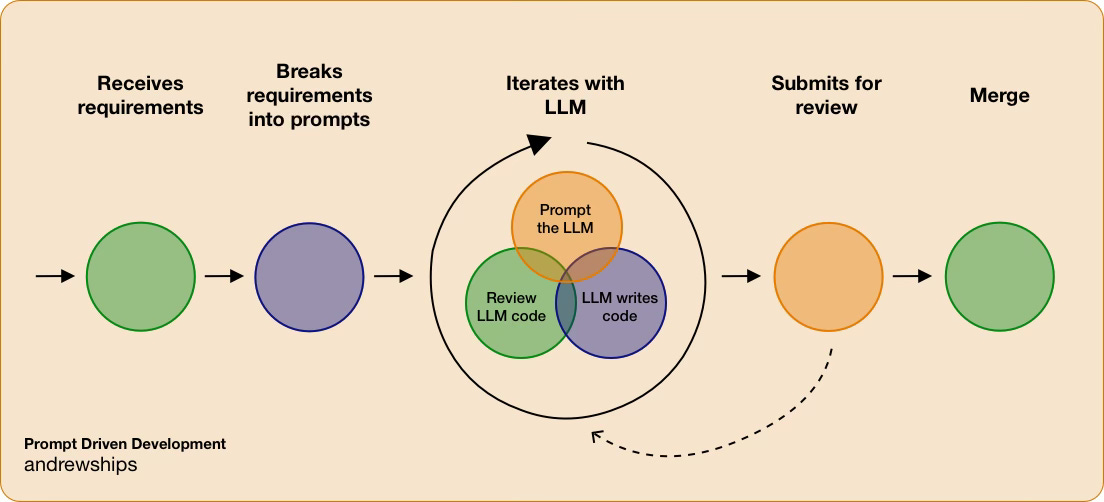

PDD has a few key differences. First, an LLM is present and writing most (if not all) of the code. Second, the developer is primarily prompting the LLM and reviewing its changes, rather than writing the code themselves. Beyond that, the rest of the flow should look more or less the same.

At a high level, the prompt driven development flow looks like this:

Developer receives requirements

Developer breaks requirements down into a series of prompts*

LLM generates code for each prompt

Developer reviews LLM generated code*

Developer submits all changes for review

Another developer reviews and merges changes

*I want to highlight two of these steps.

"Breaking requirements into prompts" is a new skill that needs to be learned and practiced. I discuss ways to approach this in the Mental Models section.

"Reviewing LLM generated code" is critical. You wouldn't blindly merge human written code, so don't do that for LLM written code either!

Who is it for?

In short, everyone.

I talk to very senior engineers who have adopted this practice and are building incredibly innovative technologies. In particular, one friend of mine created his own programming language from scratch using PDD. I also talk to non-technical individuals who are building fully functioning prototypes, and some are even deploying to prod and attracting users. One of the many incredible things about LLMs is that they have significantly lowered the barrier to coding. People with no technical experience are now able to generate functioning code.

However, I do think one skillset is uniquely positioned to benefit from this technique: individuals with technical experience who have transitioned into roles focused on product, strategy, or design. This includes folks like technical product managers, technical designers, and engineering managers. This happens to match my own background (dev > ux > pm), so I may be biased in my excitement for PDD.

The reason this skillset is uniquely positioned to benefit from PDD is that these individuals likely have an architectural understanding of how software works but have not retained fluency in programming syntax. Because LLMs handle the syntax for you, that lack of fluency is no longer a constraint. Through PDD they can build effectively and unencumbered.

Tools and Workflows

Foundational Models

These are the LLMs that will be writing your code. This is an incredibly active and dynamic space as new models are trained and released. For the purpose of PDD, here are the main points that matter:

I'm typically using Claude 3.5 Sonnet, GPT-4o, and OpenAI o1

There's tons of data benchmarking the coding abilities of various models. Refer to the latest benchmarks when selecting a model

Chats

ChatGPT, Claude, Gemini

Regular ol' Chats are an option for PDD. Personally, I prefer an LLM-Native IDE, but several developers I've talked to use Chats instead for their simplicity. It's a bit more manual work to provide all the right context to the LLM, and then to paste the output into your codebase, but it can work just fine.

The workflow would look like this:

Write your prompt for the LLM

Copy all the necessary code context from your codebase and include it as part of the prompt

Copy the code generated by the LLM

Paste the code output into the right place in your codebase
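If you do go the Chat route, the "copy all the necessary code context" step is where mistakes creep in. Below is a minimal TypeScript sketch of a tiny helper that assembles a pasteable prompt; it is not a feature of any Chat product, `buildChatPrompt` and `SourceFile` are hypothetical names, and it assumes you've already read the relevant files into memory.

```typescript
// Hypothetical shape: one file from your codebase, already read into memory.
interface SourceFile {
  path: string;
  content: string;
}

// Hypothetical helper: put the instruction first, then every file the LLM needs,
// each wrapped in start/end markers so the model can tell where one file ends.
function buildChatPrompt(instruction: string, files: SourceFile[]): string {
  const sections = files.map(
    (f) => `--- ${f.path} ---\n${f.content.replace(/\s+$/, "")}\n--- end of ${f.path} ---`
  );
  return [instruction.trim(), "", `Relevant files (${files.length}):`, ...sections].join("\n");
}

// Example usage: build the text you would paste into ChatGPT or Claude.
const chatPrompt = buildChatPrompt(
  "Adjust the getMarker function in the backend/routes/user.js file.",
  [{ path: "backend/routes/user.js", content: "function getMarker(user) {\n  // ...\n}\n" }]
);
console.log(chatPrompt);
```

The point isn't the script itself; it's that every prompt carries the instruction plus the files it touches, in a predictable layout.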

I find this doesn't work for me as a primary workflow, but I do use Chats occasionally during PDD. If you're going to try this out, definitely use Claude Projects or ChatGPT Projects. These features allow you to organize files and chats together, which will help tremendously while providing code context to the LLM.

LLM-Native IDEs

Cursor, Windsurf, Bolt, v0, Replit

These tools are a total game changer. They are IDEs that give you access to LLMs within the window of the IDE. They have two huge advantages over using a Chat to generate code:

It's super easy to provide code context to the LLM

The LLM is able to edit your files directly in the IDE

This makes for a significantly faster feedback loop while generating code because you don't have to copy+paste code context into a Chat, and then copy+paste the output into your IDE. Those steps just disappear.

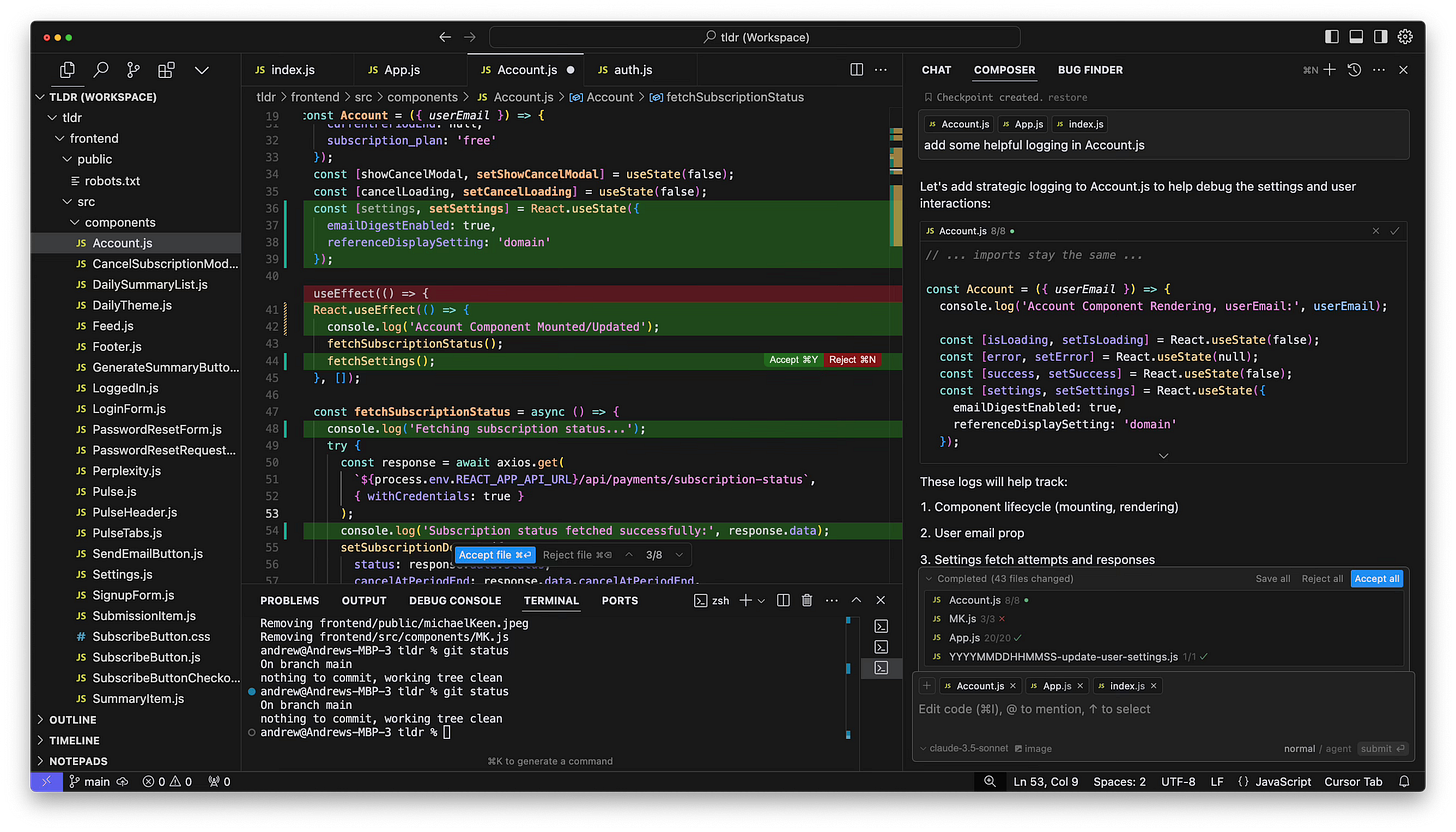

There are many of these tools, and more seem to pop up every few months. Personally, I use Cursor. My honest take is that they are all more or less the same. There are nuances (Replit will deploy your codebase for you, and Windsurf will connect with GitHub for you), but by and large, the primary value they all provide is a streamlined UX for PDD.

I strongly recommend picking an LLM-Native IDE and sticking with it. The switching cost between the various options is super low, so there's no need to agonize over the choice; you can always change later. The skill you want to develop is how to write excellent prompts, not memorizing all the features of a particular IDE. So pick one, stick with it, and focus on your prompting.

My Workflow

I use a combination of ChatGPT and Cursor in my workflow. Here's a rough breakdown:

80% of the time I'm iterating back-and-forth with Cursor. I prompt Claude (via Cursor's Composer feature) to make changes. I review them, accept or reject. Repeat. If there's a bug, we work on it together to get it resolved.

15% of the time, I enlist GPT-4o to act as my technical thought partner. This is when I am making a more complex change or building something that is new to me. I will ask GPT-4o to propose a technical approach and write a few tickets that I can use as prompts. I then enter the standard loop with Cursor.

5% of the time, I enlist o1 for its powerful reasoning abilities. This is when I am either starting a new project or making a long series of complex changes. It's similar to working with GPT-4o, but o1 produces higher quality and longer output. While I might ask 4o to generate 3 tickets, I'll ask o1 to generate over a dozen. It's able to plan out the entire technical approach to a project or major feature.

Mental Models for Success

Better prompting == better LLM generated code. Garbage in, garbage out, right? Everyone's goals, environment, and skillsets are different. So instead of telling you exactly how to write your prompts, here are a few mental models that will improve your prompt quality and, in turn, the quality of your generated code.

The LLM is a junior dev who's new to the project

LLMs have incredible coding abilities (see benchmarks above). However, if you're new to PDD, I highly recommend adopting this idea first. Tell yourself the LLM is a junior level developer who's brand new to the project. When you're crafting your prompt, think about how you might instruct this type of individual. You wouldn't say something like, "build me an app that lets friends post pictures and follow each other." That would be an insane approach to guiding a new developer, and they would absolutely fail.

Instead, you would give them a very small, very specific change to make. And you would probably point them to all the right files, as well as tell them a whole bunch about what the product is, and how people use it. This is the right way to think about prompting an LLM for code generation. Not only will it produce better results, it will also force you to get better at prompting, which is really what you want to be focused on.

Act as if you're the entire product team

Don't limit yourself to providing technical context for the LLM. Think about the input that the Product Manager, Designer, Dev Lead, and QA Engineer provide for a scope of work. You want to emulate this as much as possible without over-investing.

In addition to technical direction, you should also be providing:

User stories

Business goals

UI description

UX flow description

Testing plans

Adding these types of notes into your prompts will give the LLM much more well-rounded context and increase its ability to accomplish your desired goals.
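One way to make this a habit is to keep a reusable brief with a slot for each role. Here's a minimal TypeScript sketch; the `PromptBrief` fields and the `renderBrief` helper are hypothetical names of my own, the example values are made up, and any section you leave empty is simply skipped.

```typescript
// Hypothetical brief: one optional slot per role on the product team.
interface PromptBrief {
  task: string;           // technical direction (Dev Lead)
  userStories?: string[]; // Product Manager
  businessGoal?: string;  // Product Manager
  uiDescription?: string; // Designer
  uxFlow?: string;        // Designer
  testingPlan?: string[]; // QA Engineer
}

// Render the brief as plain prompt text, skipping any section left empty.
function renderBrief(brief: PromptBrief): string {
  const parts: string[] = [`Task: ${brief.task}`];
  if (brief.userStories && brief.userStories.length > 0) {
    parts.push("User stories:", ...brief.userStories.map((s) => `- ${s}`));
  }
  if (brief.businessGoal) parts.push(`Business goal: ${brief.businessGoal}`);
  if (brief.uiDescription) parts.push(`UI: ${brief.uiDescription}`);
  if (brief.uxFlow) parts.push(`UX flow: ${brief.uxFlow}`);
  if (brief.testingPlan && brief.testingPlan.length > 0) {
    parts.push("Testing plan:", ...brief.testingPlan.map((t) => `- ${t}`));
  }
  return parts.join("\n");
}

// Example usage (all values are illustrative).
console.log(
  renderBrief({
    task: "Add a toggle on the settings page where the user can set their account to private or public.",
    userStories: ["As a user, I want to hide my posts from people who don't follow me."],
    uxFlow: "The toggle saves immediately and shows a confirmation message.",
    testingPlan: ["A private account's posts are hidden from non-followers."],
  })
);
```

Filling in every slot isn't required; the template just makes it obvious when you've skipped the PM, design, or QA perspective entirely.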

Context, Specificity, and Scope

These are the three variables to consider as you tune your own prompting. The relationship between these variables and code quality is straightforward. To improve the quality of the LLM generated code, you need to:

Increase the amount of context you provide

Increase the specificity of your request

Narrow the scope of the change

For the best output you want HIGH context, HIGH specificity, and NARROW scope

Here's how I define these terms…

Context is the snippets or files from your codebase you provide in your prompt.

Low context:

"Make these changes on the back-end"

High context:

"Adjust the getMarker function in the backend/routes/user.js file. And here is that file, plus 3 more files it commonly interacts with..."

Specificity is how clearly defined and precise your ask is.

Low specificity:

"When a user clicks submit, add their submission to the feed."

High specificity:

"When a user clicks submit, call the saveSubmission endpoint to store the submission in the database. Once saved, automatically call the loadFeed function to refresh the feed on the frontend so that the user is able to see their submission immediately, without having to reload the page."
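The difference shows up directly in the code. Here's a minimal TypeScript sketch of the submit handler the high-specificity prompt describes. `saveSubmission` and `loadFeed` come from the prompt itself; the `Submission` and `FeedApi` types and the `handleSubmit` name are hypothetical, and both functions are passed in because the prompt doesn't say how they're implemented.

```typescript
// Hypothetical shape of a feed submission.
interface Submission {
  text: string;
}

// The two operations named in the prompt, injected so this sketch
// doesn't assume any particular HTTP client or state library.
interface FeedApi {
  saveSubmission: (s: Submission) => Promise<void>; // store the submission in the database
  loadFeed: () => Promise<void>;                     // refresh the feed on the frontend
}

// Save first, then refresh the feed, so the user sees their submission
// immediately without reloading the page.
async function handleSubmit(submission: Submission, api: FeedApi): Promise<void> {
  await api.saveSubmission(submission);
  await api.loadFeed();
}
```

Almost every line maps to a phrase in the prompt. The low-specificity version leaves the ordering, and whether the feed refreshes at all, up to the LLM.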

Scope is how much we are asking the LLM to change at once.

Broad scope:

"Add a settings page where the user can manage and update their account."

Narrow scope:

"Add a toggle on the settings page where the user can set their account to private or public."

So remember, for the best output you want HIGH context, HIGH specificity, and NARROW scope. Yes, this takes time to write, but so does code. You'll have to find the balance that makes sense for your own skillset.

LLMs write bugs (just like humans)

Guess what? LLMs aren't perfect. I talk to people who tried PDD once "but it hallucinated a bug so I stopped." I recommend tempering your expectations and simply accepting that the LLM will generate a bug here and there. That is part of the normal development process, even when humans are writing the code.

This is also why it's so important to review and test the LLM's code before accepting it. Don't blindly merge the changes it makes. You wouldn't do that with human written code, so why would you do that with LLM written code? If you're encountering bugs more than ~5% of the time, revisit your prompting technique using the suggestions above.

Industry Implications

The bar has been raised

One common mistake is to look at this tech and think that we don't need developers anymore. I think that's the wrong way to think about LLM generated code. Instead, what's really happened is that the bar has been raised for everyone in the industry.

For technical people, being fluent in a programming language is no longer enough, because that skill can be 100% outsourced to an LLM. Now developers need a deep architectural understanding of the codebase they're working in, and strong writing skills so that they can articulate the desired changes to the LLM with precision.

For non-technical people, being able to write requirements for a dev team is no longer enough. Instead they need to be able to build fully functioning prototypes that run locally. And to do that, they need enough technical understanding to be able to describe to the LLM what is needed, and know how to spin up a local web server.

Levels won't disappear, but they will change

There's been a lot of talk lately about the entry (or even mid) level developer role being replaced by AI. I understand what these people are trying to say, but I think that's a false claim. What they really mean is that LLMs are now capable of doing the work we currently ascribe to an entry or mid level developer. That, I tend to agree with. But the idea that those roles will disappear is missing the forest for the trees.

Eliminating entry and mid-level roles would dramatically disrupt the developer talent pipeline. It's a recipe for population collapse. Eventually the people who are now senior developers will retire, and we won't have been able to replace them at a quick enough rate because there is no talent pipeline.

Instead, what will 100% happen is that the definition of entry and mid level developer will change. The types of tasks they are expected to do will become more sophisticated. They will likely be using LLMs to generate a lot of their code. And they will be expected, again, to have a deep architectural understanding of their product as well as strong written communication skills.

Learning software development will look different

When I learned software development in the early 2010s, I was taught concepts in roughly this order:

Basic concepts (variables, data structures, etc.)

Language syntaxes and features (I learned C++, Java, LISP, Ruby, JavaScript)

Collaboration (Git)

Architectural patterns (Front/Back End, MVC)

...and then my career pivoted into Product

I anticipate a few changes for people learning software development now. First, they won't learn 5 programming languages like I did; there's no reason to. They'll probably learn one and rely on LLMs for other languages and frameworks. Second, they'll start learning architectural patterns earlier, probably at the same time as basic concepts, because you need this knowledge to write quality prompts. Third, they'll learn to work with LLMs from day one and, in turn, hone very strong written communication skills for quality prompting.

It'll look something like this:

Basic concepts + Architectural patterns

One programming language + Prompt writing (probably Python or JavaScript, learned through prompting)

Collaboration

...and so on

Smaller, newer companies will adopt Prompt Driven Development first

Change is hard, and organizational inertia will keep large, established technology companies from adopting these practices at scale. It will take much longer for them to collectively build trust in the LLM's code quality, understand that prompt quality is the more important factor, stop feeling threatened by LLMs, and ultimately shift to adopting these tools.

Small, newer companies have every reason to be AI-native from day one, and no reason not to be. They will adopt these practices with ease, quickly learn how to integrate them into their own unique culture, and ship at rates never before seen in the industry. This is already happening, with companies like Headstart (an NYC-based software firm) leading the way.

Conclusion

Prompt-Driven Development (PDD) represents a transformative shift in software creation, emphasizing architectural understanding over syntax fluency. By leveraging LLMs, developers and non-developers alike can build functional products faster and more efficiently. However, the quality of LLM-generated code depends heavily on the clarity and specificity of prompts, making communication and strategic thinking critical skills.

We need more voices talking about this, from both technical and non-technical communities. I'd love to see senior developers sharing their thoughts and experiences. I'd also love to see designers or entrepreneurs who have never coded sharing their thoughts. If anyone wants to talk or collaborate around these ideas, feel free to reach out in one of the channels below.
